QEMU monitor savevm/loadvm source code analysis

Source version: git://git.qemu.org/qemu.git v2.5.0

The savevm command is handled by hmp_savevm and loadvm by hmp_loadvm. The corresponding command table entries are:

{

.name = "savevm",

.args_type = "name:s",

.params = "[tag|id]",

.help = "save a VM snapshot. If no tag or id are provided, a new snapshot is created",

.mhandler.cmd = hmp_savevm,

},

{

.name = "loadvm",

.args_type = "name:s",

.params = "tag|id",

.help = "restore a VM snapshot from its tag or id",

.mhandler.cmd = hmp_loadvm,

.command_completion = loadvm_completion,

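},

The code above is generated at build time; it lives in x86_64-softmmu/hmp-commands.h under the build directory.

Start with hmp_savevm. When savevm oenhan is run in the QEMU monitor console, const char *name = qdict_get_try_str(qdict, "name") in hmp_savevm retrieves the name oenhan. bdrv_all_can_snapshot and bdrv_all_delete_snapshot handle two preliminary cases: the former rejects image formats that do not support snapshots, such as raw, and the latter deletes any existing snapshot with the same name.

qemu_fopen_bdrv then creates and fills in a QEMUFile structure, which looks like this: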

struct QEMUFile {

const QEMUFileOps *ops;

void *opaque;

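int64_t bytes_xfer; /* number of bytes written so far */

int64_t xfer_limit; /* write limit for the file; bytes_xfer must not exceed it */

int64_t pos; /* current file position, though it does not appear to be used */

int buf_index; /* index marking the end of the data in buf */

int buf_size; /* 0 when writing */

uint8_t buf[IO_BUF_SIZE]; /* everything read or written is buffered here */

struct iovec iov[MAX_IOV_SIZE];

unsigned int iovcnt;

int last_error; /* whether an earlier read/write failed; if so, further I/O is most likely refused */

};

In practice, all qemu_fopen_bdrv does at this point is the equivalent of QEMUFile.ops = bdrv_write_ops and QEMUFile.opaque = bs; it just initializes the structure.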
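To make the buf and buf_index comments concrete, here is a minimal sketch of a byte-at-a-time buffered write, plus a 32-bit big-endian write built from four such calls. SketchFile, sketch_put_byte and sketch_put_be32 are hypothetical names, not QEMU's, and the buffer size is an arbitrary assumption. The real QEMUFile also flushes through ops when the buffer fills, which is left out here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_BUF_SIZE 64   /* hypothetical; stands in for IO_BUF_SIZE */

/* Hypothetical, stripped-down stand-in for QEMUFile: a buffer and an index. */
typedef struct SketchFile {
    uint8_t buf[SKETCH_BUF_SIZE];
    int buf_index;           /* index one past the last byte written */
} SketchFile;

/* Append a single byte. A real implementation would flush when full;
 * this sketch simply asserts that there is room left. */
static void sketch_put_byte(SketchFile *f, uint8_t v)
{
    assert(f->buf_index < SKETCH_BUF_SIZE);
    f->buf[f->buf_index++] = v;
}

/* Write a 32-bit value most significant byte first, one byte per call. */
static void sketch_put_be32(SketchFile *f, uint32_t v)
{
    sketch_put_byte(f, (uint8_t)(v >> 24));
    sketch_put_byte(f, (uint8_t)(v >> 16));
    sketch_put_byte(f, (uint8_t)(v >> 8));
    sketch_put_byte(f, (uint8_t)v);
}
```

Writing 0x11223344 into an empty SketchFile leaves the bytes 11 22 33 44 in buf and buf_index at 4, regardless of the host's byte order.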

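Next comes qemu_savevm_state, the main function. It starts by initializing a number of migration structures; in essence, savevm reuses the migration machinery for collecting state. migrate_init fills in a MigrationState, although ms does not seem to be used afterwards. Further down is qemu_savevm_state_header. There, qemu_put_be32 writes into the QEMUFile by calling qemu_put_byte four times, one byte per call; the details are easy to follow with the comments in QEMUFile above. Since savevm_state.skip_configuration is false, vmstate_save_state is called next, which brings in two more structures, VMStateField and VMStateDescription: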

typedef struct {

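const char *name; /* usually the stringified name of the field */

/* The computation is fairly involved; for VMSTATE_UINTTL(env.eip, X86CPU)

* it amounts to the offset of env.eip within X86CPU.

*/

size_t offset;

size_t size; /* length of a single saved element */

size_t start;

int num; /* element count: treated as 1 for scalars, may be > 1 when the saved data is an array */

size_t num_offset;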

size_t size_offset;

const VMStateInfo *info;

enum VMStateFlags flags;

const VMStateDescription *vmsd;

int version_id;

bool (*field_exists)(void *opaque, int version_id);

} VMStateField;

struct VMStateDescription {

const char *name; /* identifying name */

int unmigratable; /* nonzero if the data cannot be migrated */

int version_id; /* mainly used for compatibility checks */

int minimum_version_id;

int minimum_version_id_old;

LoadStateHandler *load_state_old;

int (*pre_load)(void *opaque); /* callbacks for the different stages */

int (*post_load)(void *opaque, int version_id);

void (*pre_save)(void *opaque);

bool (*needed)(void *opaque);

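/* the data descriptions live here, as an array of structures */

VMStateField *fields;

const VMStateDescription **subsections; /* sub-modules */

};

On entering vmstate_save_state, the first statement fetches vmsd->fields, i.e. the VMStateField array whose definition is shown above. The actual initializer looks like this: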

static const VMStateDescription vmstate_configuration = {

.name = "configuration",

.version_id = 1,

.post_load = configuration_post_load,

.pre_save = configuration_pre_save,

.fields = (VMStateField[]) {

VMSTATE_UINT32(len, SaveState), //describes the len member of SaveState

//describes the name member of SaveState

VMSTATE_VBUFFER_ALLOC_UINT32(name, SaveState, 0, NULL, 0, len),

VMSTATE_END_OF_LIST()

},

};

The VMStateField macros are quite intricate; have a look if you are interested. gdb shows the following result:

(gdb) p *(vmstate_configuration.fields)

$9 = {name = 0x555555b27100 "len", offset = 24, size = 4, start = 0, num = 0, num_offset = 0, size_offset = 0, info = 0x555555f6e4b0 <vmstate_info_uint32>, flags = VMS_SINGLE, vmsd = 0x0, version_id = 0, field_exists = 0x0}

Continuing, vmsd->pre_save(opaque) is in effect a call to configuration_pre_save, which works on the savevm_state structure:

typedef struct SaveState {

QTAILQ_HEAD(, SaveStateEntry) handlers;

int global_section_id;

bool skip_configuration;

uint32_t len;

const char *name;

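} SaveState;

It assigns name, the machine type QEMU is currently using: MACHINE_GET_CLASS(current_machine)->name, here pc-i440fx-2.5. In other words, configuration_pre_save just records the name of the machine model. vmdesc is 0, so the related handling can be ignored.

field->name is set and field->field_exists is NULL, so the code goes on to assign base_addr and the other variables. base_addr = opaque + field->offset, so base_addr now points at the data we want from SaveState, namely len. n_elems = vmstate_n_elems(opaque, field) only matters for arrays, and size = vmstate_size(opaque, field) is the length of a single element. Then comes the loop:

/* the loop usually runs only once */

for (i = 0; i < n_elems; i++) {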

void *addr = base_addr + size * i;

/* vmsd_desc_field_start returns immediately because vmdesc_loop is NULL */

vmsd_desc_field_start(vmsd, vmdesc_loop, field, i, n_elems);

//quickly fetch the current offset

old_offset = qemu_ftell_fast(f);

if (field->flags & VMS_ARRAY_OF_POINTER) {

addr = *(void **)addr;

}

if (field->flags & VMS_STRUCT) {

vmstate_save_state(f, field->vmsd, addr, vmdesc_loop);

} else {

//dispatches through put; the concrete function here is put_uint32

field->info->put(f, addr, size);

}

written_bytes = qemu_ftell_fast(f) - old_offset;

vmsd_desc_field_end(vmsd, vmdesc_loop, field, written_bytes, i);

/* Compressed arrays only care about the first element */

if (vmdesc_loop && vmsd_can_compress(field)) {

vmdesc_loop = NULL;

}

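}

It is easy to see that the core function is put_uint32; its flow was covered earlier, so I skip it. Back to vmstate_save_state(f, &vmstate_configuration, &savevm_state, 0): following the description in vmstate_configuration, it copies the two members of savevm_state into the QEMUFile. At this point qemu_savevm_state_header is done.

Now enter qemu_savevm_state_begin. The whole function walks savevm_state.handlers and runs the registered callbacks, via QTAILQ_FOREACH(se, &savevm_state.handlers, entry). Looking back at savevm_state.handlers: handlers is just a list head pointing to a linked list of SaveStateEntry structures, i.e. se: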
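The offset-driven walk over fields can be sketched in isolation. This is a simplified illustration, not QEMU's code: SketchField, SketchState, SKETCH_FIELD and sketch_save_state are hypothetical names, and the sketch copies raw bytes in host byte order, whereas the real put callbacks (put_uint32 and friends) serialize each value themselves.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical miniature of VMStateField: where a member lives and how big it is. */
typedef struct SketchField {
    const char *name;
    size_t offset;   /* offset of the member inside the owning struct */
    size_t size;     /* size of one element in bytes */
    int num;         /* element count; 1 for scalars */
} SketchField;

/* Hypothetical state structure to be saved. */
typedef struct SketchState {
    uint32_t len;
    uint16_t regs[3];
} SketchState;

/* Build a field description from a member name, like the VMSTATE_* macros do. */
#define SKETCH_FIELD(_m, _type, _num) \
    { #_m, offsetof(_type, _m), sizeof(((_type *)0)->_m) / (_num), (_num) }

static const SketchField sketch_fields[] = {
    SKETCH_FIELD(len, SketchState, 1),
    SKETCH_FIELD(regs, SketchState, 3),
    { NULL, 0, 0, 0 }   /* end-of-list marker */
};

/* Copy every described field from opaque into out; returns bytes written. */
static size_t sketch_save_state(const SketchField *field, const void *opaque,
                                uint8_t *out, size_t out_size)
{
    size_t written = 0;

    for (; field->name != NULL; field++) {
        const uint8_t *base_addr = (const uint8_t *)opaque + field->offset;

        for (int i = 0; i < field->num; i++) {
            const uint8_t *addr = base_addr + field->size * (size_t)i;

            assert(written + field->size <= out_size);
            memcpy(out + written, addr, field->size);  /* raw copy, host order */
            written += field->size;
        }
    }
    return written;
}
```

The point of the design is that sketch_save_state knows nothing about SketchState; the table alone tells it where each member is, which is why one generic function can serve every device.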

typedef struct SaveStateEntry {

QTAILQ_ENTRY(SaveStateEntry) entry;

char idstr[256];

int instance_id;

int alias_id;

int version_id;

int section_id;

SaveVMHandlers *ops;

const VMStateDescription *vmsd;

void *opaque;

CompatEntry *compat;

int is_ram;

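} SaveStateEntry;

The loop calls functions in se->ops. Leave aside what they do for now; the question is when the entries in savevm_state.handlers get attached and how they are initialized. Remember that ops has type SaveVMHandlers.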

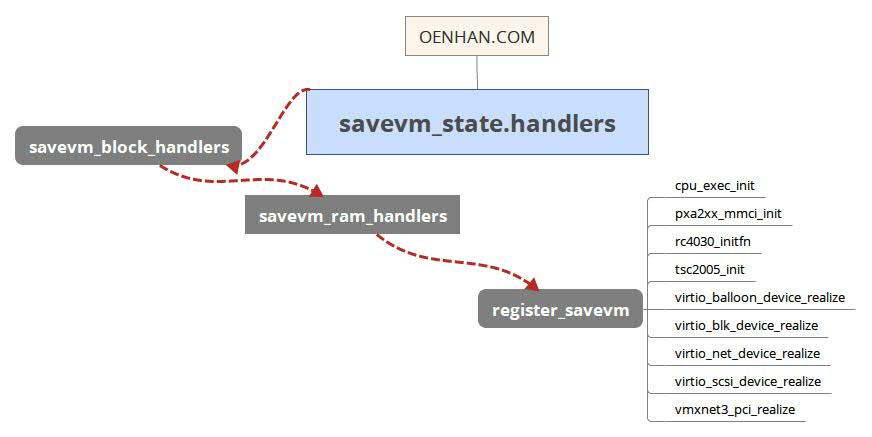

Start from QEMU's main function at startup: it calls blk_mig_init and ram_mig_init, both of which call register_savevm_live. The last two parameters are SaveVMHandlers *ops and void *opaque:

int register_savevm_live(DeviceState *dev,

const char *idstr,

int instance_id,

int version_id,

SaveVMHandlers *ops,

void *opaque)

{

SaveStateEntry *se; //exactly the kind of object linked into savevm_state.handlers

se = g_new0(SaveStateEntry, 1);

se->version_id = version_id;

se->section_id = savevm_state.global_section_id++;

se->ops = ops; //ops is assigned here

se->opaque = opaque; //the object the handlers operate on

se->vmsd = NULL;

//..... code omitted ...

/* add at the end of list */

QTAILQ_INSERT_TAIL(&savevm_state.handlers, se, entry); //this is where the entry is inserted

return 0;

}

The ops passed in by blk_mig_init is savevm_block_handlers:

static SaveVMHandlers savevm_block_handlers = {

.set_params = block_set_params,

.save_live_setup = block_save_setup,

.save_live_iterate = block_save_iterate,

.save_live_complete_precopy = block_save_complete,

.save_live_pending = block_save_pending,

.load_state = block_load,

.cleanup = block_migration_cleanup,

.is_active = block_is_active,

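};

register_savevm_live is the core of registration; looking at its callers reveals the registration paths. One important caller is register_savevm, which is itself called from many more places.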

int register_savevm(DeviceState *dev,

const char *idstr,

int instance_id,

int version_id,

SaveStateHandler *save_state,

LoadStateHandler *load_state,

void *opaque)

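{ // only two callbacks of SaveVMHandlers are filled in, then the whole thing is hooked into the list

SaveVMHandlers *ops = g_new0(SaveVMHandlers, 1);

ops->save_state = save_state; // function passed in by the caller

ops->load_state = load_state; // function passed in by the caller

return register_savevm_live(dev, idstr, instance_id, version_id,

ops, opaque);

}

Take the call in cpu_exec_init as an example: the functions passed in are cpu_save and cpu_load, which save and load the CPU state.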

register_savevm(NULL, "cpu", cpu_index, CPU_SAVE_VERSION,

cpu_save, cpu_load, cpu->env_ptr);

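savevm_state.handlers is simply a linked list. When a newly emulated device needs its state saved, it hooks its own save/load functions onto handlers and they get executed. The overall list is shown in the figure above; QEMU has many more entries that are not listed in detail, which you can find by reading the code.

Back in qemu_savevm_state_begin: se->ops->set_params ends up calling a single function, block_set_params, because no other ops sets that callback.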
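The registration pattern can be sketched without QEMU's QTAILQ macros. This is a simplified illustration under stated assumptions: SketchOps, SketchEntry, SketchSaveState and the sketch_* functions are hypothetical names, a hand-rolled singly linked list with a tail pointer stands in for QTAILQ, and only one callback is modelled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical miniature of SaveVMHandlers: a table of optional callbacks. */
typedef struct SketchOps {
    int (*save_state)(void *opaque);   /* NULL when the entry has no such hook */
} SketchOps;

/* Hypothetical miniature of SaveStateEntry. */
typedef struct SketchEntry {
    const char *idstr;
    int section_id;
    const SketchOps *ops;
    void *opaque;                      /* handed back to every callback */
    struct SketchEntry *next;
} SketchEntry;

/* Hypothetical miniature of SaveState: list head plus the id counter. */
typedef struct SketchSaveState {
    SketchEntry *head;
    SketchEntry **tail;                /* slot where the next entry is linked */
    int global_section_id;
} SketchSaveState;

static void sketch_state_init(SketchSaveState *s)
{
    s->head = NULL;
    s->tail = &s->head;
    s->global_section_id = 0;
}

/* Append a new entry at the end of the list; returns 0 on success. */
static int sketch_register(SketchSaveState *s, const char *idstr,
                           const SketchOps *ops, void *opaque)
{
    SketchEntry *se = calloc(1, sizeof(*se));

    assert(idstr != NULL);
    if (se == NULL) {
        return -1;
    }
    se->idstr = idstr;
    se->section_id = s->global_section_id++;
    se->ops = ops;
    se->opaque = opaque;
    *s->tail = se;
    s->tail = &se->next;
    return 0;
}

/* Walk the list and run save_state where present; returns how many ran. */
static int sketch_save_all(const SketchSaveState *s)
{
    int called = 0;

    for (const SketchEntry *se = s->head; se != NULL; se = se->next) {
        if (se->ops == NULL || se->ops->save_state == NULL) {
            continue;                  /* nothing to call for this entry */
        }
        se->ops->save_state(se->opaque);
        called++;
    }
    return called;
}

/* Release every entry. */
static void sketch_state_free(SketchSaveState *s)
{
    while (s->head != NULL) {
        SketchEntry *next = s->head->next;
        free(s->head);
        s->head = next;
    }
    s->tail = &s->head;
}

/* Example callback: bumps the int counter passed as opaque. */
static int sketch_count_save(void *opaque)
{
    (*(int *)opaque)++;
    return 0;
}
```

Entries registered without ops stay on the list but are skipped by the walk, which mirrors the if (!se->ops || ...) continue checks in the QEMU loops.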

(gdb) p *params

$4 = {blk = false, shared = false}

static void block_set_params(const MigrationParams *params, void *opaque)

{

block_mig_state.blk_enable = params->blk;

block_mig_state.shared_base = params->shared;

/* shared base means that blk_enable = 1 */

block_mig_state.blk_enable |= params->shared;

}

Comparing the params argument with block_set_params makes it clear that params is tailored to block_set_params; if other handlers set this callback, it might actually cause problems.

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

if (!se->ops || !se->ops->save_live_setup) {

continue;

}

if (se->ops && se->ops->is_active) {

if (!se->ops->is_active(se->opaque)) {

continue;

}

}

save_section_header(f, se, QEMU_VM_SECTION_START);

ret = se->ops->save_live_setup(f, se->opaque);

save_section_footer(f, se);

if (ret < 0) {

qemu_file_set_error(f, ret);

break;

}

}

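In the loop above, the first entry visited is savevm_block_handlers, but it is skipped because se->ops->is_active(se->opaque) returns false; that value comes from what set_params assigned earlier. Next is savevm_ram_handlers. se->ops->is_active is NULL in all the remaining handlers, so execution reaches save_section_header, which writes the section header, and then se->ops->save_live_setup, i.e. ram_save_setup. Memory handling is the key part; further down come ram_save_iterate and ram_save_complete, covered later.

In ram_save_setup, migration_bitmap_sync_init and reset_ram_globals initialize global variables. migrate_use_xbzrle is false, so the handling in between can be ignored. last_ram_offset returns the largest RAM offset (for the RAM layout, see "KVM source code analysis 4: memory virtualization", KVM源代码分析4:内存虚拟化). ram_bitmap_pages is the number of host pages needed to cover the guest memory space emulated by QEMU, and bitmap_new allocates a bitmap for migration_bitmap_rcu->bmap and zero-initializes it. The migration_bitmap_rcu structure is: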

static struct BitmapRcu {

struct rcu_head rcu;

/* Main migration bitmap */

unsigned long *bmap;

/* bitmap of pages that haven't been sent even once

* only maintained and used in postcopy at the moment

* where it's used to send the dirtymap at the start

* of the postcopy phase

*/

unsigned long *unsentmap;

} *migration_bitmap_rcu;

Since this is only a local save, migration_bitmap_rcu->unsentmap is clearly unused and is skipped later on. migration_dirty_pages is the number of pages used by RAM.
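The page-count and bitmap arithmetic can be illustrated with a small standalone sketch. It assumes 4 KiB pages, and every sketch_* name is hypothetical; QEMU's own bitmap_new, set_bit and test_bit helpers are not used here.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define SKETCH_PAGE_BITS 12   /* assumption: 4 KiB pages */
#define SKETCH_BITS_PER_LONG (sizeof(unsigned long) * CHAR_BIT)

/* Number of pages needed to cover offsets [0, last_ram_offset). */
static uint64_t sketch_bitmap_pages(uint64_t last_ram_offset)
{
    return last_ram_offset >> SKETCH_PAGE_BITS;
}

/* Allocate a bitmap with nbits bits, all cleared; release it with free(). */
static unsigned long *sketch_bitmap_new(uint64_t nbits)
{
    size_t nlongs = (size_t)((nbits + SKETCH_BITS_PER_LONG - 1) /
                             SKETCH_BITS_PER_LONG);

    return calloc(nlongs ? nlongs : 1, sizeof(unsigned long));
}

/* Mark page nr as dirty. */
static void sketch_set_bit(unsigned long *bmap, uint64_t nr)
{
    assert(bmap != NULL);
    bmap[nr / SKETCH_BITS_PER_LONG] |= 1UL << (nr % SKETCH_BITS_PER_LONG);
}

/* Return 1 if page nr is marked dirty, 0 otherwise. */
static int sketch_test_bit(const unsigned long *bmap, uint64_t nr)
{
    return (int)((bmap[nr / SKETCH_BITS_PER_LONG] >>
                  (nr % SKETCH_BITS_PER_LONG)) & 1UL);
}

/* Count dirty pages among the first nbits pages. */
static uint64_t sketch_count_dirty(const unsigned long *bmap, uint64_t nbits)
{
    uint64_t count = 0;

    for (uint64_t i = 0; i < nbits; i++) {
        count += (uint64_t)sketch_test_bit(bmap, i);
    }
    return count;
}
```

With these assumptions, 1 MiB of RAM needs 256 bits, one per page, so the bitmap itself stays tiny compared with the memory it tracks.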

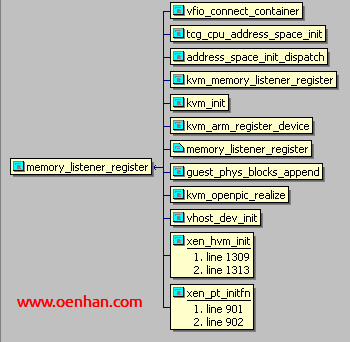

Now enter memory_global_dirty_log_start. MEMORY_LISTENER_CALL_GLOBAL invokes a callback on every MemoryListener:

#define MEMORY_LISTENER_CALL_GLOBAL(_callback, _direction, _args...) \

do { \

MemoryListener *_listener; \

\

switch (_direction) { \

case Forward: \

/* this loop follows the same idea as the earlier ones */ \

QTAILQ_FOREACH(_listener, &memory_listeners, link) { \

if (_listener->_callback) { \

_listener->_callback(_listener, ##_args); \

} \

} \

break; \

case Reverse: \

QTAILQ_FOREACH_REVERSE(_listener, &memory_listeners, \

memory_listeners, link) { \

if (_listener->_callback) { \

_listener->_callback(_listener, ##_args); \

} \

} \

break; \

default: \

abort(); \

} \

} while (0)

A MemoryListener gets onto memory_listeners through memory_listener_register. There are quite a few registrations, as in the figure above, but we only care about:

kvm_memory_listener_register(s, &s->memory_listener,

&address_space_memory, 0);

memory_listener_register(&kvm_io_listener,

&address_space_io);

The focus is still address_space_memory. However, the callback that MEMORY_LISTENER_CALL_GLOBAL invokes here is log_global_start, and log_global_start is not set for the address_space_memory listener, so MEMORY_LISTENER_CALL_GLOBAL can be skipped.

memory_region_transaction_begin only matters when kvm_enabled. address_space_memory is initialized in address_space_init, and address_space_init_dispatch sets up the callbacks:

as->dispatch_listener = (MemoryListener) {

.begin = mem_begin,

.commit = mem_commit,

.region_add = mem_add,

.region_nop = mem_add,

.priority = 0,

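};

The code that follows calls mem_begin and mem_commit, and a little later migration_bitmap_sync; these all synchronize memory that is being written, and are covered later.

What follows saves some data from ram_list.blocks.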

qemu_put_be64(f, ram_bytes_total() | RAM_SAVE_FLAG_MEM_SIZE);

QLIST_FOREACH_RCU(block, &ram_list.blocks, next) {

qemu_put_byte(f, strlen(block->idstr));

qemu_put_buffer(f, (uint8_t *)block->idstr, strlen(block->idstr));

qemu_put_be64(f, block->used_length);

}

ram_control_before_iterate and ram_control_after_iterate do nothing here: f->ops is bdrv_write_ops, whose before_ram_iterate and after_ram_iterate are both NULL. With that, ram_save_setup is finished.

Back in qemu_savevm_state_begin: the save_live_setup pass, whose main work is block and ram, is now complete.

Back in qemu_savevm_state, qemu_savevm_state_iterate runs next. It simply calls the se->ops->save_live_iterate callbacks, i.e. block_save_iterate and ram_save_iterate from savevm_block_handlers and savevm_ram_handlers:
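The byte layout produced by that block-list loop can be sketched against a plain buffer. The sketch rests on several assumptions: SketchBlock and the sketch_* functions are hypothetical names, SKETCH_FLAG_MEM_SIZE is given the value 0x04 as a stand-in for RAM_SAVE_FLAG_MEM_SIZE, the total is taken to be the sum of the blocks' used_length, and the block names in the usage note are only examples.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_FLAG_MEM_SIZE 0x04   /* assumption: stands in for RAM_SAVE_FLAG_MEM_SIZE */

/* Hypothetical miniature of a RAM block: a name and a length in bytes. */
typedef struct SketchBlock {
    const char *idstr;
    uint64_t used_length;
} SketchBlock;

/* Store v big-endian at out[pos..pos+7]; returns the new position. */
static size_t sketch_put_be64(uint8_t *out, size_t pos, uint64_t v)
{
    for (int shift = 56; shift >= 0; shift -= 8) {
        out[pos++] = (uint8_t)(v >> shift);
    }
    return pos;
}

/* Serialize: total size OR'ed with the flag, then for each block a
 * one-byte name length, the name bytes, and the block length. */
static size_t sketch_save_block_list(const SketchBlock *blocks, size_t n,
                                     uint8_t *out, size_t out_size)
{
    uint64_t total = 0;
    size_t pos = 0;

    for (size_t i = 0; i < n; i++) {
        total += blocks[i].used_length;
    }
    assert(out_size >= 8);
    pos = sketch_put_be64(out, pos, total | SKETCH_FLAG_MEM_SIZE);

    for (size_t i = 0; i < n; i++) {
        size_t len = strlen(blocks[i].idstr);

        assert(len <= 255);                      /* length must fit in one byte */
        assert(pos + 1 + len + 8 <= out_size);
        out[pos++] = (uint8_t)len;
        memcpy(out + pos, blocks[i].idstr, len); /* no terminating NUL is stored */
        pos += len;
        pos = sketch_put_be64(out, pos, blocks[i].used_length);
    }
    return pos;
}
```

For two blocks named pc.ram (0x8000000 bytes) and vga.vram (0x1000000 bytes), the output is 40 bytes: 8 for the flagged total, then 15 and 17 for the two records. Because page-aligned sizes leave the low bits of the total clear, the flag can share the same 64-bit word.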

static SaveVMHandlers savevm_block_handlers = {

.set_params = block_set_params,

.save_live_setup = block_save_setup,

.save_live_iterate = block_save_iterate,

.save_live_complete_precopy = block_save_complete,

.save_live_pending = block_save_pending,

.load_state = block_load,

.cleanup = block_migration_cleanup,

.is_active = block_is_active,

};

static SaveVMHandlers savevm_ram_handlers = {

.save_live_setup = ram_save_setup,

.save_live_iterate = ram_save_iterate,

.save_live_complete_postcopy = ram_save_complete,

.save_live_complete_precopy = ram_save_complete,

.save_live_pending = ram_save_pending,

.load_state = ram_load,

.cleanup = ram_migration_cleanup,

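};

In ram_save_iterate, ram_control_before_iterate has no effect and qemu_file_rate_limit throttles how fast the QEMUFile is written. Then comes ram_find_and_save_block, whose details I skip for now: its job is to find dirty pages and send them to the QEMUFile, processing each block in ram_list.blocks. By the time flush_compressed_data is reached, the dirty pages have all been written to the QEMUFile; flush_compressed_data deals with compressed data and is not covered here. That completes ram_save_iterate.

Return to qemu_savevm_state_iterate, and from there to qemu_savevm_state. That is basically the whole savevm process. But something is odd. Common sense says savevm needs to save block state for the disk (though I don't think it is really needed; it is only there because the code is shared with migration): savevm_block_handlers takes care of disk state and savevm_ram_handlers takes care of memory. So who takes care of the CPU registers?

That is because part of the picture was left out. Earlier I said "take the call in cpu_exec_init as an example: the functions passed in are cpu_save and cpu_load, which save and load the CPU state". When register_savevm registers them, the opaque argument is cpu->env_ptr. This pointer is assigned differently on each architecture; on x86 it is (CPUX86State *env = &cpu->env);

Straight to the code, nice and clean: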

void cpu_save(QEMUFile *f, void *opaque)

{

CPUSPARCState *env = opaque;

int i;

uint32_t tmp;

// if env->cwp == env->nwindows - 1, this will set the ins of the last

// window as the outs of the first window

cpu_set_cwp(env, env->cwp);

for(i = 0; i < 8; i++)

qemu_put_betls(f, &env->gregs[i]);

qemu_put_be32s(f, &env->nwindows);

for(i = 0; i < env->nwindows * 16; i++)

qemu_put_betls(f, &env->regbase[i]);

/* FPU */

for (i = 0; i < TARGET_DPREGS; i++) {

qemu_put_be32(f, env->fpr[i].l.upper);

qemu_put_be32(f, env->fpr[i].l.lower);

}

qemu_put_betls(f, &env->pc);

qemu_put_betls(f, &env->npc);

qemu_put_betls(f, &env->y);

tmp = cpu_get_psr(env);

qemu_put_be32(f, tmp);

qemu_put_betls(f, &env->fsr);

qemu_put_betls(f, &env->tbr);

tmp = env->interrupt_index;

qemu_put_be32(f, tmp);

qemu_put_be32s(f, &env->pil_in);

#ifndef TARGET_SPARC64

qemu_put_be32s(f, &env->wim);

/* MMU */

for (i = 0; i < 32; i++)

qemu_put_be32s(f, &env->mmuregs[i]);

for (i = 0; i < 4; i++) {

qemu_put_be64s(f, &env->mxccdata[i]);

}

for (i = 0; i < 8; i++) {

qemu_put_be64s(f, &env->mxccregs[i]);

}

qemu_put_be32s(f, &env->mmubpctrv);

qemu_put_be32s(f, &env->mmubpctrc);

qemu_put_be32s(f, &env->mmubpctrs);

qemu_put_be64s(f, &env->mmubpaction);

for (i = 0; i < 4; i++) {

qemu_put_be64s(f, &env->mmubpregs[i]);

}

#else

qemu_put_be64s(f, &env->lsu);

for (i = 0; i < 16; i++) {

qemu_put_be64s(f, &env->immuregs[i]);

qemu_put_be64s(f, &env->dmmuregs[i]);

}

for (i = 0; i < 64; i++) {

qemu_put_be64s(f, &env->itlb[i].tag);

qemu_put_be64s(f, &env->itlb[i].tte);

qemu_put_be64s(f, &env->dtlb[i].tag);

qemu_put_be64s(f, &env->dtlb[i].tte);

}

qemu_put_be32s(f, &env->mmu_version);

for (i = 0; i < MAXTL_MAX; i++) {

qemu_put_be64s(f, &env->ts[i].tpc);

qemu_put_be64s(f, &env->ts[i].tnpc);

qemu_put_be64s(f, &env->ts[i].tstate);

qemu_put_be32s(f, &env->ts[i].tt);

}

qemu_put_be32s(f, &env->xcc);

qemu_put_be32s(f, &env->asi);

qemu_put_be32s(f, &env->pstate);

qemu_put_be32s(f, &env->tl);

qemu_put_be32s(f, &env->cansave);

qemu_put_be32s(f, &env->canrestore);

qemu_put_be32s(f, &env->otherwin);

qemu_put_be32s(f, &env->wstate);

qemu_put_be32s(f, &env->cleanwin);

for (i = 0; i < 8; i++)

qemu_put_be64s(f, &env->agregs[i]);

for (i = 0; i < 8; i++)

qemu_put_be64s(f, &env->bgregs[i]);

for (i = 0; i < 8; i++)

qemu_put_be64s(f, &env->igregs[i]);

for (i = 0; i < 8; i++)

qemu_put_be64s(f, &env->mgregs[i]);

qemu_put_be64s(f, &env->fprs);

qemu_put_be64s(f, &env->tick_cmpr);

qemu_put_be64s(f, &env->stick_cmpr);

cpu_put_timer(f, env->tick);

cpu_put_timer(f, env->stick);

qemu_put_be64s(f, &env->gsr);

qemu_put_be32s(f, &env->gl);

qemu_put_be64s(f, &env->hpstate);

for (i = 0; i < MAXTL_MAX; i++)

qemu_put_be64s(f, &env->htstate[i]);

qemu_put_be64s(f, &env->hintp);

qemu_put_be64s(f, &env->htba);

qemu_put_be64s(f, &env->hver);

qemu_put_be64s(f, &env->hstick_cmpr);

qemu_put_be64s(f, &env->ssr);

cpu_put_timer(f, env->hstick);

#endif

}

Part of what I wrote above is wrong. Which part? The CPU save and load part, because of this:

#if defined(CPU_SAVE_VERSION) && !defined(CONFIG_USER_ONLY)

register_savevm(NULL, "cpu", cpu_index, CPU_SAVE_VERSION,

cpu_save, cpu_load, cpu->env_ptr);

assert(cc->vmsd == NULL);

assert(qdev_get_vmsd(DEVICE(cpu)) == NULL);

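#endif

The register_savevm call is compiled out by the #if defined(...) guard, so in this x86 build cpu_save and cpu_load do not exist. I have left the incorrect part above in place; if you could not tell what was wrong, tough luck.

So who does the job? It still has to go through savevm_state.handlers. In fact there is another function that registers handlers, vmstate_register_with_alias_id, but it does not set ops: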

se = g_new0(SaveStateEntry, 1);

se->version_id = vmsd->version_id;

se->section_id = savevm_state.global_section_id++;

se->opaque = opaque;

se->vmsd = vmsd;

se->alias_id = alias_id;

Only vmsd is set, so the loop in qemu_savevm_state_begin has no effect on these entries. vmstate_register_with_alias_id is called by vmstate_register, which is what cpu_exec_init uses:

if (cc->vmsd != NULL) {

vmstate_register(NULL, cpu_index, cc->vmsd, cpu);

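}

Here cc->vmsd is vmstate_x86_cpu, which is where the link to CPUX86State is made. It only takes effect in qemu_savevm_state_complete_precopy, called from qemu_savevm_state just as we were about to pack up and leave. In qemu_savevm_state_complete_precopy, the first QTAILQ_FOREACH(se, &savevm_state.handlers, entry) loop can be skipped for these entries because se->ops is NULL. In the second loop:

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

//the key is the third condition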

if ((!se->ops || !se->ops->save_state) && !se->vmsd) {

continue;

}

if (se->vmsd && !vmstate_save_needed(se->vmsd, se->opaque)) {

trace_savevm_section_skip(se->idstr, se->section_id);

continue;

}

trace_savevm_section_start(se->idstr, se->section_id);

json_start_object(vmdesc, NULL);

json_prop_str(vmdesc, "name", se->idstr);

json_prop_int(vmdesc, "instance_id", se->instance_id);

save_section_header(f, se, QEMU_VM_SECTION_FULL);

//the function that does the real work

vmstate_save(f, se, vmdesc);

trace_savevm_section_end(se->idstr, se->section_id, 0);

save_section_footer(f, se);

json_end_object(vmdesc);

}

In vmstate_save, vmstate_save_state processes the vmsd using the mechanism described earlier; in effect it just reads the values out of CPUX86State.
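The two skip conditions, the one in the setup loop and the one in this final loop, can be compared side by side in a small sketch. All Sketch* types and sketch_* functions are hypothetical simplifications; the predicates only mirror the if conditions quoted in this article, with the needed check standing in for vmstate_save_needed as an assumption about what that helper tests.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical miniature of SaveVMHandlers with just two hooks. */
typedef struct SketchOps {
    void (*save_state)(void *opaque);
    int (*save_live_setup)(void *opaque);
} SketchOps;

/* Hypothetical miniature of VMStateDescription. */
typedef struct SketchVmsd {
    const char *name;
    bool (*needed)(void *opaque);      /* optional; NULL means always needed */
} SketchVmsd;

/* Hypothetical miniature of SaveStateEntry. */
typedef struct SketchEntry {
    const SketchOps *ops;
    const SketchVmsd *vmsd;
    void *opaque;
} SketchEntry;

/* Setup loop: only entries whose ops provide save_live_setup take part. */
static bool sketch_saved_in_setup_loop(const SketchEntry *se)
{
    assert(se != NULL);
    return se->ops != NULL && se->ops->save_live_setup != NULL;
}

/* Final loop: an entry takes part if it has ops->save_state or a vmsd,
 * unless the vmsd reports that it is not needed. */
static bool sketch_saved_in_full_loop(const SketchEntry *se)
{
    assert(se != NULL);
    if ((se->ops == NULL || se->ops->save_state == NULL) && se->vmsd == NULL) {
        return false;
    }
    if (se->vmsd != NULL && se->vmsd->needed != NULL &&
        !se->vmsd->needed(se->opaque)) {
        return false;
    }
    return true;
}

/* Do-nothing callbacks used to populate example entries. */
static int sketch_noop_setup(void *opaque)
{
    (void)opaque;
    return 0;
}

static void sketch_noop_save(void *opaque)
{
    (void)opaque;
}
```

An entry with only save_live_setup (like ram) takes part in the setup loop but not the final one, while an entry with only a vmsd (like the x86 CPU) does the opposite, which is why the CPU state shows up only at the very end.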

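With that, the savevm command is done.

loadvm is much the same as savevm, so I won't bother writing it up; apply the same reasoning and read the code yourself.

QEMU monitor savevm/loadvm source code analysis, by OenHan

Link: https://oenhan.com/qemu-monitor-savevm-loadvm

Awesome! Very professional!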

Hi

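My company has a requirement: create an internal disk snapshot while the VM is running (the VM's memory state is not needed). My idea is to write a function that is basically the same as save_snapshot but does not call qemu_savevm_state. Is that feasible? Is there a better way?

@EDWARD_35 It can be implemented, but there is one big problem: without memory, what happens to the I/O buffers inside the guest? They are lost, file system consistency cannot be guaranteed, and a disk snapshot taken this way can easily end up with a crashed file system. What would be the point?

I am currently working on VM live migration. Specifically, I want to attach some information of my own during live migration so that it is transferred to the destination along with everything else, assuming TCP transport. I have read QEMU's live migration source, and it migrates in three steps: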

qemu_savevm_state_begin

qemu_savevm_state_iterate (iterated in a loop)

qemu_savevm_state_complete

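All three steps write the data to be transferred into the QEMUFile structure, which is then sent over.

My questions are as follows:

During the transfer, some macros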

#define QEMU_VM_EOF 0x00

#define QEMU_VM_SECTION_START 0x01

#define QEMU_VM_SECTION_PART 0x02

#define QEMU_VM_SECTION_END 0x03

#define QEMU_VM_SECTION_FULL 0x04

#define QEMU_VM_SUBSECTION 0x05

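are written to the file. I don't quite understand what these macros mean, i.e. when each of these markers should be written into the QEMUFile structure.

Also, on the receiving side, why are QEMU_VM_SECTION_START and QEMU_VM_SECTION_FULL handled together as one group (source in Savevm.c, qemu_loadvm_state())?

I hope you can give me some pointers. Thanks.

@朱康 QEMU_VM_SECTION_START marks setup content saved into the QEMUFile, QEMU_VM_SECTION_PART marks content from an iteration, and QEMU_VM_SECTION_END marks the content of the final iteration. These three are used mainly by memory and block, which complete migration by gradually converging. QEMU_VM_SECTION_FULL marks device state, which is copied in full in one go. QEMU_VM_SUBSECTION marks a subsection. As far as qemu_loadvm_state_main is concerned, QEMU_VM_SECTION_START and QEMU_VM_SECTION_FULL have the same standing: each is followed by the header of one device's migration data.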
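As a rough illustration of that grouping, the sketch below walks a stream reduced to its marker bytes and counts how many markers open a new section versus continue an existing one. It is a deliberate simplification: real streams carry headers and payloads between markers, and SketchTally and sketch_walk_sections are hypothetical names. The marker values are the ones quoted in the question above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Marker values as quoted in the question above. */
enum {
    SKETCH_VM_EOF           = 0x00,
    SKETCH_VM_SECTION_START = 0x01,
    SKETCH_VM_SECTION_PART  = 0x02,
    SKETCH_VM_SECTION_END   = 0x03,
    SKETCH_VM_SECTION_FULL  = 0x04
};

/* Hypothetical tally of what the walk saw. */
typedef struct SketchTally {
    int new_sections;   /* START or FULL: a full section header follows */
    int continued;      /* PART or END: refers to an already known section */
} SketchTally;

/* Walk marker bytes until EOF. Returns 0 on a clean EOF, -1 on an
 * unknown marker or if the input ends without an EOF marker. */
static int sketch_walk_sections(const uint8_t *types, size_t n, SketchTally *t)
{
    assert(t != NULL);
    t->new_sections = 0;
    t->continued = 0;

    for (size_t i = 0; i < n; i++) {
        switch (types[i]) {
        case SKETCH_VM_SECTION_START:
        case SKETCH_VM_SECTION_FULL:
            t->new_sections++;      /* both introduce a section */
            break;
        case SKETCH_VM_SECTION_PART:
        case SKETCH_VM_SECTION_END:
            t->continued++;
            break;
        case SKETCH_VM_EOF:
            return 0;
        default:
            return -1;
        }
    }
    return -1;
}
```

A stream shaped like START, PART, PART, END, FULL, FULL, EOF (one iterated section followed by two device sections) yields three new sections and three continuations.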

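@OENHAN Thank you for the guidance. One more question: what is the difference between the block migration you mentioned and the device migration in the QEMU_VM_SECTION_FULL stage?

@朱康 block and memory both converge step by step over the iterations; most other things are QEMU_VM_SECTION_FULL.

register_savevm(NULL, "hookapi", 0, 2, hookapi_save, hookapi_load, NULL);

Under what conditions are hookapi_save and hookapi_load, registered with register_savevm, actually called?

I did not fully understand the explanation of register_savevm in your post. Could you explain it in more detail? Thanks.

@朱康 This is very old code. The two functions run during migration or snapshot: hookapi_save runs in the source VM when migrating, and hookapi_load runs in the destination VM when loading.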